void main( )

Are we there yet?

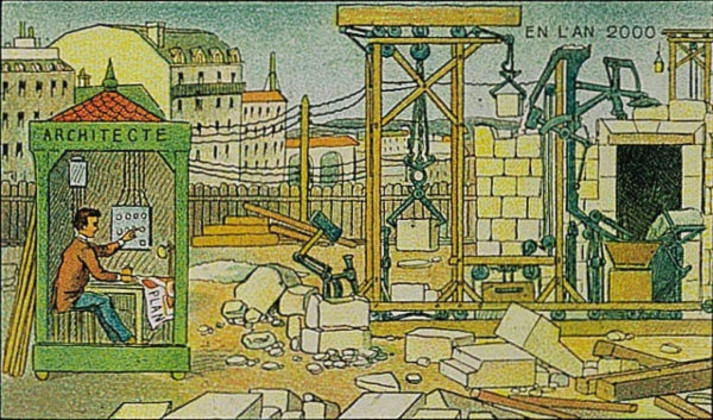

A series of futuristic images, the first set of which was produced for the 1900 Exposition Universelle in Paris, depicted what it would be like to live in the then-distant year 2000; the series was aptly named En L’an 2000. Some of these predictions fell way off the mark. Unfortunately, aerial firemen in 19th-century dress with wings for flying do not come to our aid in emergencies, nor do we have the bizarrely awesome whale-bus that would be in PETA’s crosshairs. Nonetheless, some predictions, such as electric scrubbing, hearing the newspaper and correspondence cinema, have become part of our lives in the form of the Roomba, podcasts and Skype/FaceTime respectively.

However, one prediction in particular piques our curiosity because of its title: electrical construction site. An architect is depicted overseeing the automated construction of a building with the help of specialized machinery on site. One machine dimensions the stone, another lifts it into place in the wall, and a third mixes and applies a layer of mortar. According to the creator of this utopian image, the whole process is orchestrated by the architect, who somehow manages to derive all the data from a plan drawing. Let’s repeat the question, since it is always asked fervently and more than once: are we there yet?

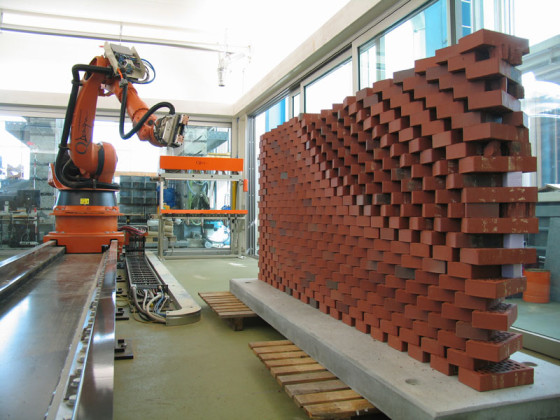

Short answer: not really. Actually, only a very, very, very few of us are on the way. In the second installment of this trilogy, we left off at the invention of numerical control. Although digital computational models, computer numerical control applications and human-computer interaction were investigated thoroughly in the realm of architecture in the ’70s, mainstream attention to them is quite recent. Close relatives of our beloved “orange” robotic arm were first used at ETH Zurich in 2006, in Gramazio & Kohler’s elective course The Programmed Wall. Their first global impact came at the 2008 Venice Biennale, in the Swiss Pavilion. Let’s pace ourselves and take a step back to explore how this phenomenon came into being, and tie everything together in our gradual progression.

Gramazio & Kohler’s 2006 elective course set out to define a new role for the architect and for architecture.

“Being there” requires a new approach to the design process itself. It requires a redefinition of the architect. He has to drop his false belief that architectural truth is instinctive and inherent to him. Rather than relying solely on a single top-down solution, he has to incorporate an additional bottom-up strategy that populates solutions to the design problem he has framed, with materiality and fabrication know-how embedded in the process from the start. This computational process requires algorithms: sets of instructions written by humans to be performed by a computer.1 Instead of channeling an idea directly into form, it channels an idea into a process and eventually into a form.

In The City of Tomorrow and Its Planning, Le Corbusier contrasts man’s way with the pack-donkey’s and claims that with the advent of the straight line and the right angle, man conquers nature. He rejected and pitied the medieval city, favoring the straight lines of the Roman grid. What if the pack-donkey was more adept at designing a road, since it knew better how to navigate the terrain with a load? Shouldn’t we take its expertise into account?
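One way to take the pack-donkey’s expertise into account is to let a procedure, rather than a ruler, find the road. The sketch below is a minimal illustration under stated assumptions: the `TERRAIN` grid, the `climb_penalty` weight and the function names are hypothetical, and the search is plain Dijkstra’s algorithm, not a method from this series. Each step costs 1 plus a penalty per metre climbed or descended, so the route emerges bottom-up from the terrain instead of being imposed as a straight line.

```python
import heapq

# Hypothetical terrain: heights in metres on a 4 x 5 grid, with a hill in the middle.
TERRAIN = [
    [0, 0, 0, 0, 0],
    [0, 9, 9, 9, 0],
    [0, 9, 9, 9, 0],
    [0, 0, 0, 0, 0],
]


def step_cost(terrain, a, b, climb_penalty=10.0):
    """One step costs 1 plus a penalty per metre of height gained or lost."""
    return 1.0 + climb_penalty * abs(terrain[b[0]][b[1]] - terrain[a[0]][a[1]])


def path_cost(terrain, path, climb_penalty=10.0):
    """Total cost of a given route, e.g. the straight line."""
    return sum(step_cost(terrain, a, b, climb_penalty) for a, b in zip(path, path[1:]))


def donkey_path(terrain, start, goal, climb_penalty=10.0):
    """Least-cost route between two cells (Dijkstra's algorithm, 4-connected grid).

    Assumes the goal is reachable, which always holds on a rectangular grid.
    """
    rows, cols = len(terrain), len(terrain[0])
    best = {start: 0.0}
    came_from = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale entry: a cheaper route to this cell was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nxt[0] < rows and 0 <= nxt[1] < cols:
                new_cost = cost + step_cost(terrain, cell, nxt, climb_penalty)
                if new_cost < best.get(nxt, float("inf")):
                    best[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(queue, (new_cost, nxt))
    # Walk back from the goal to the start to recover the route.
    path = [goal]
    while path[-1] != start:
        path.append(came_from[path[-1]])
    return path[::-1], best[goal]


straight = [(1, 0), (1, 1), (1, 2), (1, 3), (1, 4)]
print(path_cost(TERRAIN, straight))          # 184.0: straight over the hill
print(donkey_path(TERRAIN, (1, 0), (1, 4)))  # route found bottom-up, cost 6.0
```

With these assumed numbers, the straight line across the hill costs 184 while the winding route around it costs 6. The curve is not a lack of discipline; it is the outcome of a rule that takes the load and the terrain seriously.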

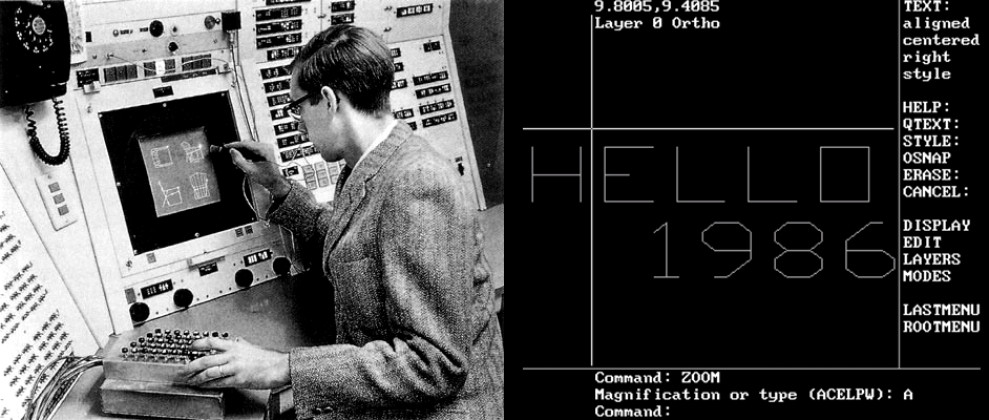

As mentioned before, architects took part in human-computer interaction experiments in the ’70s. Nicholas Negroponte, an architect, spearheaded the Architecture Machine Group, which later grew into the MIT Media Lab, one of the leading institutions researching the interaction between people and technology. Christopher Alexander’s books were essential in casting computer programming in a new light and contributed greatly to modern object-oriented software design, most visibly through the design patterns movement. So what happened that altered the conception of computers for the majority of architects?

Ivan Sutherland’s 1963 Sketchpad vs. 1986 AutoCAD R2. It’s ironic that the predecessor, 23 years older, is superior and even includes some functions that would not be available in AutoCAD until the early 21st century. More on Sketchpad in future issues.

Poorly developed CAD happened. Designers make the common mistake of confusing computation with computerization. Computerization is the direct translation of a mechanical system into the digital environment, with minor or no alterations, for enhanced efficiency. It is the act of entering, processing or storing information. Computation, on the other hand, is the procedure of calculating through mathematical and/or logical methods, as discussed previously. The typical architect’s ego kept his design methodology from transforming with the possibilities brought by the power of computation: the transformation required him to step out of his comfort zone, and the ego was not ready. Refusing to compromise on its hard-coded boundaries, the ego dramatically limited how malleable working within the new paradigm could be. Thank you, Roark-like architects who knew it all.
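The distinction can be shown with a deliberately small example. In the hypothetical sketch below, `DRAWN_STAIR` stands for computerization: numbers read off a drawing and typed in, which must be redrawn whenever the floor height changes. `compute_stair` stands for computation: it derives the same stair from the floor height using Blondel’s rule of thumb (two risers plus one tread ≈ 63 cm) and an assumed maximum riser of 18 cm. The names and values are illustrative assumptions, not a building-code check.

```python
import math

# "Computerization": numbers read off a drawing and typed in.
# If the floor height changes, someone has to redraw and retype them.
DRAWN_STAIR = {"risers": 17, "riser_cm": 17.6, "tread_cm": 27.7}


def compute_stair(floor_height_cm, max_riser_cm=18.0, step_length_cm=63.0):
    """'Computation': derive the stair from rules instead of storing the result.

    Assumed rules: no riser may exceed max_riser_cm, and Blondel's rule of
    thumb (2 * riser + tread ~ 63 cm) fixes the tread depth.
    """
    risers = math.ceil(floor_height_cm / max_riser_cm)  # fewest risers within the limit
    riser = floor_height_cm / risers                     # equal risers fill the height exactly
    tread = step_length_cm - 2 * riser                    # Blondel: 2R + T = step length
    return {"risers": risers, "riser_cm": round(riser, 1), "tread_cm": round(tread, 1)}


print(compute_stair(300) == DRAWN_STAIR)  # True: the same stair, now derived
print(compute_stair(350))                 # a new floor height needs no redrawing
```

Asking for a 350 cm floor height returns 20 risers of 17.5 cm with 28 cm treads; the drawn version would have to be redrawn by hand.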

As architects instinctively clung to the methods and tools they were accustomed to, failing to think far enough outside the confines of their technological milieu, software developers tailored CAD to these mundane demands to maximize their short-term profits. With the introduction of CAD, computers replaced manual drafting tools. The notion of a tool connotes control, power, dominance and skill. However, this is not the case with computers in common architectural practice. As architects use them, computers are merely the digitized sum of different sets of pens, millions of brushes in millions of colors, rulers and protractors of infinite length, and the perfect scapegoat to blame in an emergency. Their capacity is overlooked and underestimated.

In the Second Wave, all that was solid melted into air. The Third Wave witnessed all that was solid melt into information.2 The vast amount of accessible information accelerates everything, architecture included. Architecture schools built on the polytechnic model must redefine themselves, as their curricula grow heftier with each passing day, making it practically impossible to prepare students adequately. Mass-education trends can no longer be sustained. Compared with architects of prior Waves, today’s student is offered a variety of courses and tracks to choose from instead of a strict curriculum, yet this is barely enough. That is why the concepts of working and studying had to be redefined. The workshop, which used to signify a room or building providing the space and tools required for manufacturing goods, has become synonymous with a theme- or method-specific, fast-paced way of sharing knowledge and experience, dissociated from any fixed space. Workshops ranging from intra-class to international, from half a day to four weeks, and from theoretical to labor-intensive have started popping up everywhere to meet the need for information.

Exposure to this vast amount of information pushes the architect into one of two states. In the first, the architect chooses to focus on one subject and becomes a hyper-specialist in a niche, and must therefore collaborate across disciplines with more people to realize a project. In the second, the architect branches out across professions, becoming transdisciplinary himself and filling the gaps in between. In fact, every professional, not only the architect, will eventually transcend their profession as information becomes more accessible by the day, allowing individuals to take on multiple roles. The consumer/producer dichotomy will shift. Merging consumer and producer into the “prosumer”, Alvin Toffler anticipated that prosumerism would surge as the defining character of the Third Wave, and we have seen its first steps as the Do-It-Yourself (DIY) movement and its extension, maker culture, have become quite popular. If anyone can build something on their own, why shouldn’t the architect build on his own as well?

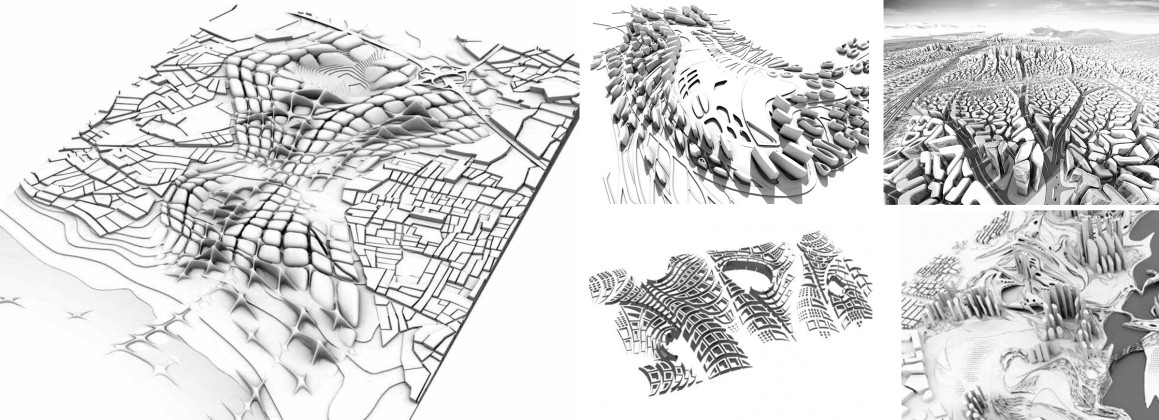

The original (Zaha Hadid Architects’ proposal for the Kartal masterplan) and the knock-offs. Just fillet every corner, take a wide-angle, high-contrast clay render and voilà!

The architect as tool builder can, and should, define his own generative components and their transformational behavior to tap into the computational prowess of the computers at his disposal. Tooling allows connections among research, design, depiction and making that have not existed since specialization began during the Renaissance.3 However, tooling without thoroughly designing the process leads to an influx of cheap imitations and, worse, hardens into a dictating style. If the parameters are taken out of their context, the design loses its qualitative value. Designers dazzled by algorithmic procedures they neither control nor understand are doomed to produce catastrophic results. Some even take the next step and try to create their own -isms.4
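Defining a generative component and its transformational behavior can be quite literal. The following is a minimal, hypothetical sketch in the spirit of the programmed brick walls mentioned earlier, not Gramazio & Kohler’s actual code: `Brick` is the component, and `lay_wall` lays a running-bond wall whose bricks rotate according to their distance from an attractor point. The attractor position, the 45° maximum rotation, the 1 m falloff and the brick dimensions are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Brick:
    """Hypothetical generative component: where a brick sits and how far it is turned."""
    x: float             # centre of the brick along the wall (m)
    y: float             # height of its course (m)
    rotation_deg: float  # rotation about the vertical axis (degrees)


def lay_wall(length, courses, brick=0.24, course_height=0.0625,
             attractor=(1.2, 0.5), max_rotation=45.0, falloff=1.0):
    """Lay a running-bond wall and turn each brick by a transformation rule:
    full rotation at the attractor point, fading linearly to zero at `falloff` metres."""
    ax, ay = attractor
    wall = []
    for course in range(courses):
        y = course * course_height
        # Running bond: every other course starts half a brick further along.
        x = brick / 2 + (brick / 2 if course % 2 else 0.0)
        while x + brick / 2 <= length + 1e-9:  # small tolerance for float drift
            distance = math.hypot(x - ax, y - ay)
            rotation = max_rotation * max(0.0, 1.0 - distance / falloff)
            wall.append(Brick(x, y, rotation))
            x += brick
    return wall


for b in lay_wall(2.4, 3)[:5]:
    print(f"x={b.x:.2f} m  y={b.y:.3f} m  rotation={b.rotation_deg:.1f} deg")
```

Note how arbitrary the inputs are: nothing in the code says why the attractor sits where it does. Tie it to light, view or structure and the rotation gains meaning; copy the numbers into another project and you get exactly the context-free imitation the paragraph above warns about.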

“Machines will lead to a new order both of work and of leisure.”5 In its original context, this referred to a lifestyle organized around machines; reinterpreted, it suggests that our interaction with machines will lead to a new order. We have moved past the point of conforming to the standards of machines, having established mass-customization through automation. Industry is now seeking ways to optimize production through collaboration between people and machines, drawing on the strengths of both. As architects, we are still trying to adopt mass-customization through automation. Apparently we have a lot of catching up to do.

The 1910 prediction of the year 2000 is impeccably spot-on for some of us, yet it remains far off for architects unwilling to adapt to the new Wave. We are not there yet, but isn’t the journey the real reward?

Notes:

1 Computers are not the only algorithmic tools: Antoni Gaudí and Frei Otto built some of the best-known analogue precursors of computation (computational physical models).

2 The original phrase, “all that is solid melts into air”, comes from Karl Marx and Friedrich Engels’ Communist Manifesto; Marcos Novak revised it for contemporary times.

3 Kieran, S., & Timberlake, J. (2003). Refabricating Architecture: How Manufacturing Methodologies Are Poised to Transform Building Construction. New York: McGraw-Hill.

4 We will, of course, discuss the dark side, Patrik Schumacher and Parametricism, in a future issue.

5 Le Corbusier. (1929). The City of Tomorrow and Its Planning. New York: Dover Publications.

08.05.2017