How to Train Your Robot*

The flow of information from design to execution is usually taken for granted as linear, or rather one-directional. Often, a G-code file with line after line of comprehensive instructions is the final step before fabrication. In this unidirectional digital flow, the instantaneous behavior of the material, the physical conditions of the working environment and the presence of a person neither provide feedback nor become part of the design process. Yet this is not the case when humans make something. Embodied cognition is essential in the flow of making. For most craftsmen and artisans, the tools they use and the materials they interact with have become natural extensions of their bodies: upgraded limbs, modified towards excellence at a particular task. What if we stopped treating robots as machines that process data irreversibly, like the punch cards of the Jacquard loom? What if the robot shed its skin as a mere idiot savant for predetermined instructions and gained the capacity to sense, react and act in return, based on feedback from the material? Such a process proposes an understanding in which the material is not merely a passive recipient of predefined shape, the machine not merely the executor of commands, the human not merely the operator, and the working environment not merely the setting.

We will focus on the shift from instruction-based making to behavior-based making, and on what the passage from virtual construct to physical artifact ought to be. This approach aims to overcome the inherent hierarchy of established design processes, which have always prioritized the definition of form over the process of material realization. We appraise this novel point of convergence between computational form generation and computer-aided materialization through the investigation of some early examples.

Taking full advantage of computation in architecture requires a shift from computerized to computational design, and this is merely the beginning. In the physical realm, this definition should also be extended towards a change from computerized to computational making1, a term coined by Achim Menges, or embodied computation2, a term coined by Axel Kilian. Menges argues that even advanced digital fabrication in architecture takes the direct translation from fully defined design to material manifestation for granted, and so remains within the realm of computerized making. In contrast, truly computational making entails the unfolding of an explorative process of materialization driven by cyber-physical feedback, which extends the design process rather than merely executing it. In parallel, Kilian uses the term embodied computation for the possibility of shifting part of the execution of formal manipulation away from a top-down process that manipulates material in a static manner, towards one in which robotic or human actions are only part of the operation and the changes triggered by those actions complete the process.

We love our “orange”, but we have to accept the truth as it is: it is an idiot savant. Even though it excels at the specific tasks it is given, especially those that rely on memory, it is not very adaptable in social environments where humans are present. It works better alone, or with similar robots that share the same condition. Rather than relying solely on a robot like our “orange”, a newer generation of robots with more advanced capabilities (and, better yet, in a smarter setting) is more suitable for the task of understanding what is going on in the fabrication process. Marketed as IIWA (intelligent industrial work assistant), this new breed of robot comes with integrated force-torque sensors. In most recent Industry 4.0 robot advertisements, we see them stop when they accidentally touch a person, or we see them learning (or rather merely repeating) a path along which they are guided by hand. Yet the possibilities are far more exciting than building a co-working environment for robots and people, or making a mechanical parrot out of your robot. This sensing capacity allows the robot to be embodied in a setting, acquiring a sense of the space as well as the material, just like a craftsman. The prospects for architecture are exciting, as this renders the use of robots not only viable but essential.

Team DIANA (dynamic & interactive assistant for novel applications), from Individualized Production in Architecture at RWTH Aachen University, proposes using robots as dynamic assistants in an unstructured environment such as a construction site. The construction site can be deemed the ultimate stress test for the universal applicability of robots. Given the low degree of technical expertise within the workforce, using, calibrating and re-programming a robot for construction tasks should not require a technical background. The team therefore proposed to redefine the teaching process through guided teaching. The robotic arm interacts with its human coworker on a haptic and visual level. The user grasps the IIWA and manually guides it through several tasks, while it captures the actions of the user and becomes informed about the fabrication process. In one case study, the robotic arm takes a wooden rod from the supply station, calculates the length of rod required for a particular section and cuts it to the desired length using a circular saw. The robot then moves the rod into the approximate position given by the digital model and seeks the exact position via its integrated force sensors. Much as we rely on our hands during a sudden blackout when we cannot see anything, the entire action is the outcome not of a set of predetermined instructions but of guided haptic teaching.
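To make the idea of "seeking the exact position" by touch more concrete, here is a minimal sketch of a guarded move: start from the approximate position given by the digital model and advance in small steps until the sensed force crosses a threshold. This is not Team DIANA's implementation or any robot vendor's API. The function names, the step size, the force threshold and the simulated sensor are all assumptions made for illustration.

```python
from typing import Callable


def seek_contact(
    start_mm: float,
    read_force_n: Callable[[float], float],
    step_mm: float = 0.5,
    threshold_n: float = 5.0,
    max_travel_mm: float = 50.0,
) -> float:
    """Advance from start_mm in small steps until the sensed force reaches
    threshold_n, and return the position where contact was detected."""
    position = start_mm
    while position - start_mm <= max_travel_mm:
        if read_force_n(position) >= threshold_n:
            return position
        position += step_mm
    raise RuntimeError("no contact found within max_travel_mm")


def simulated_force(position_mm: float) -> float:
    """Hypothetical stand-in for a force sensor: no force in free space,
    spring-like resistance once the rod pushes past a surface at 103.2 mm."""
    surface_mm = 103.2
    stiffness_n_per_mm = 20.0
    return max(0.0, (position_mm - surface_mm) * stiffness_n_per_mm)


# The digital model says "about 100 mm"; touch finds where the part really is.
contact_mm = seek_contact(start_mm=100.0, read_force_n=simulated_force)
print(contact_mm)
```

On a real arm, `read_force_n` would be replaced by a reading from the robot's own sensors, and the travel limit acts as a safeguard against pushing indefinitely when nothing is there.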

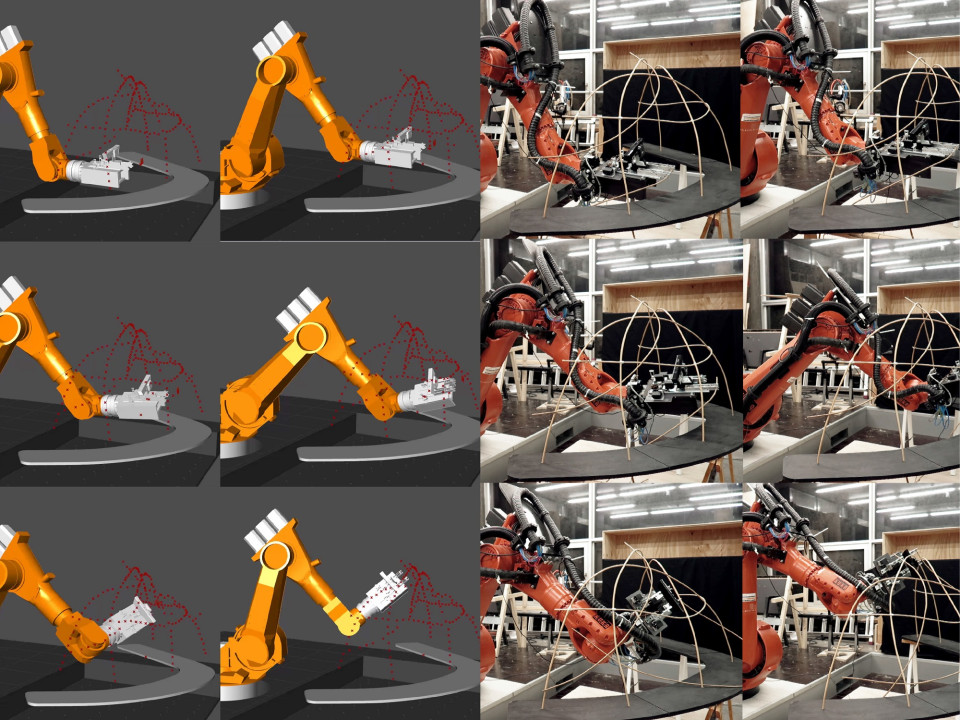

Another project we are going to cover is a master’s thesis conducted at the ICD. Not everyone is going to have an IIWA at their disposal, but “orange”-like robots can be augmented with sensors and real-time data transmission. Giulio Brugnaro’s Robotic Softness – Behavioral Fabrication Process of a Woven Space3 investigates behavioral construction strategies for architectural production that utilize robotic fabrication processes not as linear procedures of materialization but rather as a “soft system”4. A term coined by Sanford Kwinter, it refers to a system that is flexible, adaptable and evolving, and that relies on a dense network of active information and feedback loops. The main components of the design and fabrication process are an adaptive robotic fabrication framework composed of an online agent-based system, a custom weaving end-effector and a coordinated sensing strategy utilizing 3D scanning. Such integration opens up the possibility for the design to unfold simultaneously with fabrication, informed by a constant flow of sensory information. Instead of relying on explicit instructions and predictive modeling, the digital model updates itself as raw point cloud data is retrieved from the physical model. As seen in the image, the soft system is composed of two coordinated feedback loops, one acting on a local level and the other on a global one, unfolding together in real time. After each manipulation, the digital prototype is updated to reflect how the structure has changed under the different physical properties of each installed stick.
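The interplay of the two loops can be sketched in a few lines. The following is a toy illustration under heavy assumptions, not the agent-based system of the thesis: a "scan" is reduced to a short list of 3D points, the local loop replaces each planned stick position with the scanned one, and the global loop plans the next stick from the updated model rather than from the original plan. All names, the spacing rule and the simulated 2 mm sag are hypothetical.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]


def mean_point(cloud: List[Point]) -> Point:
    """Reduce a raw point cloud to a single measured position."""
    n = len(cloud)
    return tuple(sum(p[i] for p in cloud) / n for i in range(3))


def next_target(model: List[Point], spacing: float) -> Point:
    """Global loop: plan the next stick relative to where the last one
    actually ended up, not where it was supposed to be."""
    if not model:
        return (0.0, 0.0, 0.0)
    x, y, z = model[-1]
    return (x + spacing, y, z)


def fabricate(
    n_sticks: int,
    spacing: float,
    place_and_scan: Callable[[Point], List[Point]],
) -> List[Point]:
    model: List[Point] = []
    for _ in range(n_sticks):
        target = next_target(model, spacing)
        cloud = place_and_scan(target)   # physical placement followed by a 3D scan
        model.append(mean_point(cloud))  # local loop: the model adopts the measurement
    return model


def simulated_place_and_scan(target: Point) -> List[Point]:
    """Hypothetical physical step: every stick settles 2 mm lower than planned."""
    x, y, z = target
    return [(x - 1.0, y, z - 2.0), (x + 1.0, y, z - 2.0)]


model = fabricate(n_sticks=3, spacing=10.0, place_and_scan=simulated_place_and_scan)
print(model)
```

Because each target is derived from the measured state, the sag of every stick carries forward into the next decision instead of accumulating as unnoticed error against a fixed plan.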

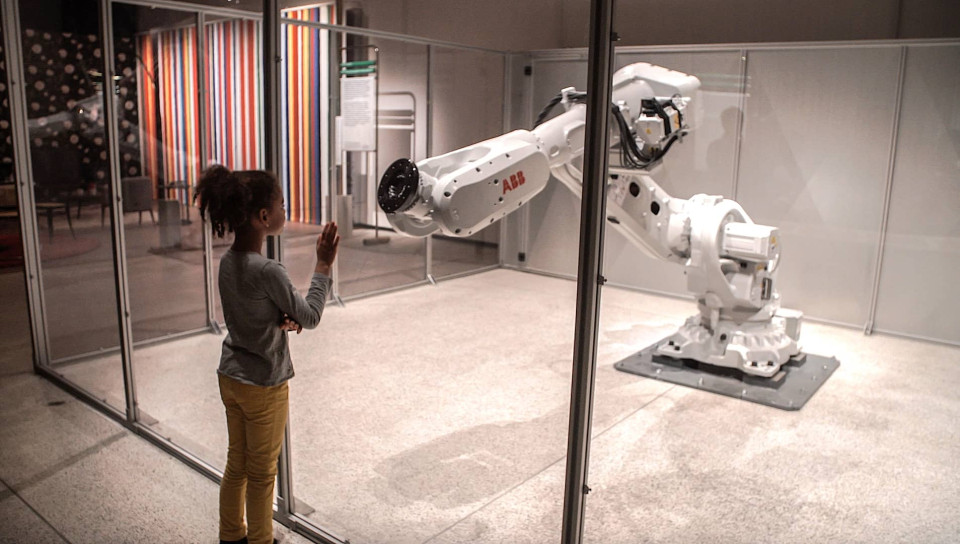

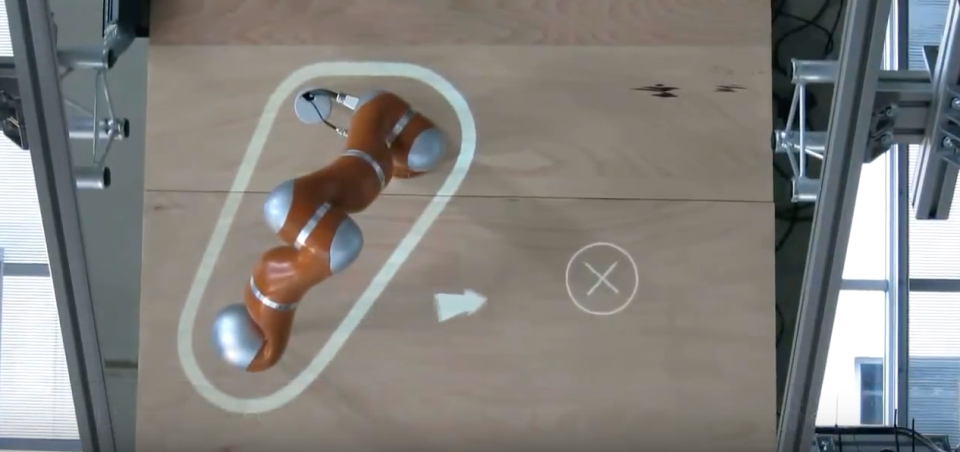

The final project we want to mention is EXECELL5 (Experimental Evaluation of Advanced Sensor-Based Supervision and Work Cell Integration Strategies), an Echord project at the intersection of human-robot interaction research, supported by the European Union. The system performs different actions based on what it observes in a work volume equipped with sensors that detect people and other objects in three dimensions under various conditions. Using an algorithm for dynamic, online planning and monitoring of safety volumes, the system identifies safety zones that take the robotic arm’s motion into account and determines whether the robot should continue at a lower speed or stop completely. With the help of a projector mounted above the working environment, the system communicates the robot arm’s trajectory by projecting it onto the collaborative workspace.
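The decision between slowing down and stopping can be illustrated with a deliberately simplified rule. This sketch is not the EXECELL algorithm: it assumes that the safety volume is a sphere around each sampled point of the planned trajectory, and the two radii and the reduced speed factor are invented values.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def speed_factor(
    person: Point,
    trajectory: List[Point],
    stop_radius_m: float = 0.5,
    slow_radius_m: float = 1.5,
    reduced_speed: float = 0.25,
) -> float:
    """Return a speed multiplier based on how close a detected person is
    to the nearest sampled point of the robot's planned trajectory."""
    nearest = min(math.dist(person, p) for p in trajectory)
    if nearest < stop_radius_m:
        return 0.0            # inside the inner zone: stop completely
    if nearest < slow_radius_m:
        return reduced_speed  # inside the outer zone: continue slowly
    return 1.0                # clear of both zones: full speed


planned_path = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (2.0, 0.0, 1.0)]

print(speed_factor((1.0, 0.2, 1.0), planned_path))
print(speed_factor((1.0, 1.0, 1.0), planned_path))
print(speed_factor((1.0, 3.0, 1.0), planned_path))
```

Checking against the planned trajectory rather than the arm's current pose is what lets the zones follow the robot's motion, which is the point the project description makes.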

Design and fabrication processes are not separate, but one. What we see on the horizon is an alternative approach to production in which the design process does not act as a predecessor to fabrication but extends into materialization. Instead of sequential progressions with no interaction in between, we should embrace explorative and experimental processes that are non-linear, where neither overshadows the other. Speaking of explorative processes, who is up for collaboration? Let us equip the robots with the necessary sensory devices, and ourselves with the coding experience, to free them from their cages for a better interaction.

Notes

*As usual, we love to reference things: “How to Train Your Dragon”.

1 Menges, Achim. "The New Cyber‐Physical Making in Architecture: Computational Construction." Architectural Design 85.5 (2015): 28-33.

2 Johns, Ryan Luke, Axel Kilian, and Nicholas Foley. "Design approaches through augmented materiality and embodied computation." Robotic Fabrication in Architecture, Art and Design 2014. Springer International Publishing, 2014. 319-332.

3 Brugnaro, Giulio, Ehsan Baharlou, Lauren Vasey, and Achim Menges. "Robotic Softness: An Adaptive Robotic Fabrication Process for Woven Structures." ACADIA 2016: Posthuman Frontiers: Data, Designers, and Cognitive Machines, Proceedings of the 36th Annual Conference of the Association for Computer Aided Design in Architecture (ACADIA). 2016. 154-163.

4 Kwinter, Sanford. "Soft systems." Culture Lab 1 (1993): 208-227.

5 EXECELL: Experimental Evaluation of Advanced Sensor-Based Supervision and Work Cell Integration Strategies. http://www.echord.info/wikis/website/execell.html

31.10.2017
